Now in public beta!

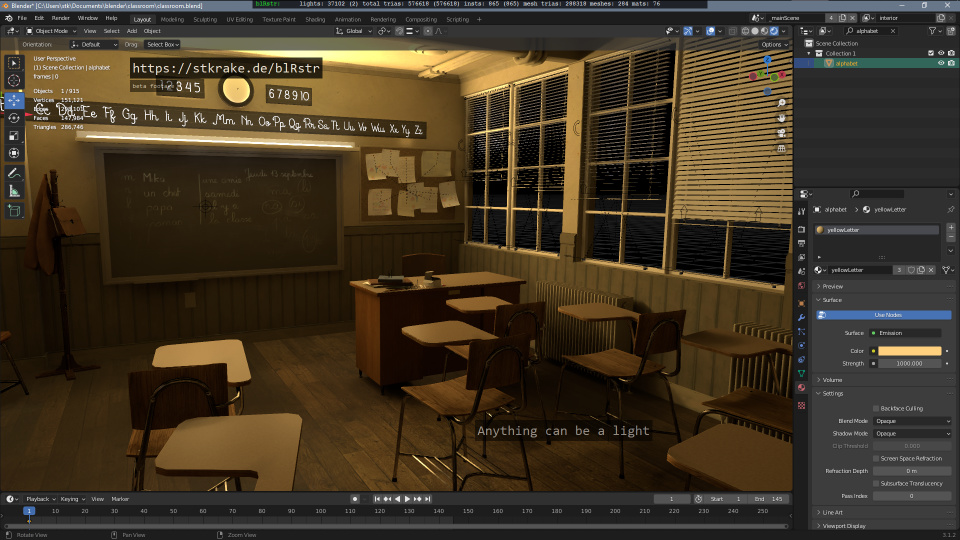

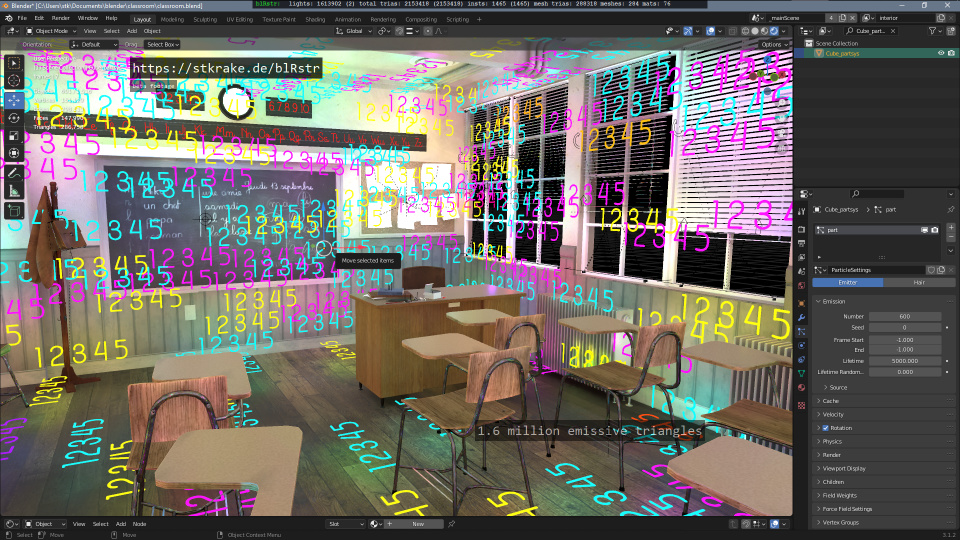

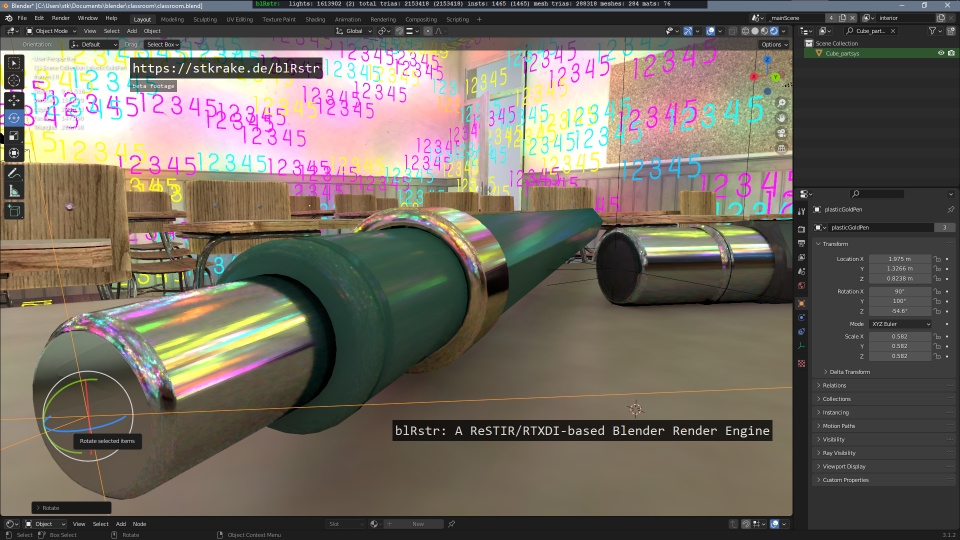

blRstr: A ReSTIR/RTXDI-based Blender Render Engine.

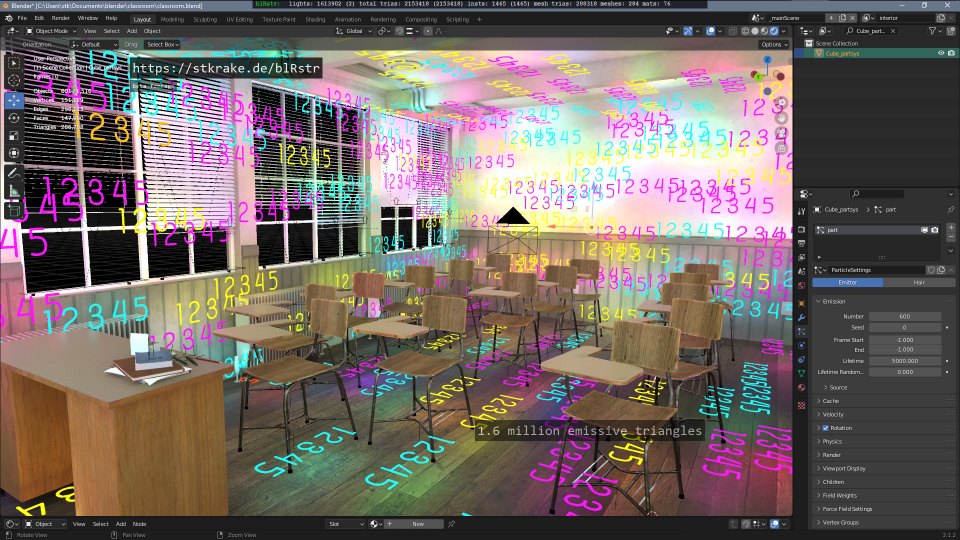

Direct and indirect lighting from tens of thousands of lights with real-time frame rates directly inside the viewport.

- ReSTIR[1]-based lighting. Uses Nvidia's RTXDI[2] implementation for maximum speed and quality.

- Direct lighting of primary surfaces with ReSTIR's spatio-temporal resampling.

- Up to 15 indirect bounces from secondary surfaces accelerated with ReSTIR.

- High quality real-time denoising using ReLAX from Nvidia's NRD[3] SDK.

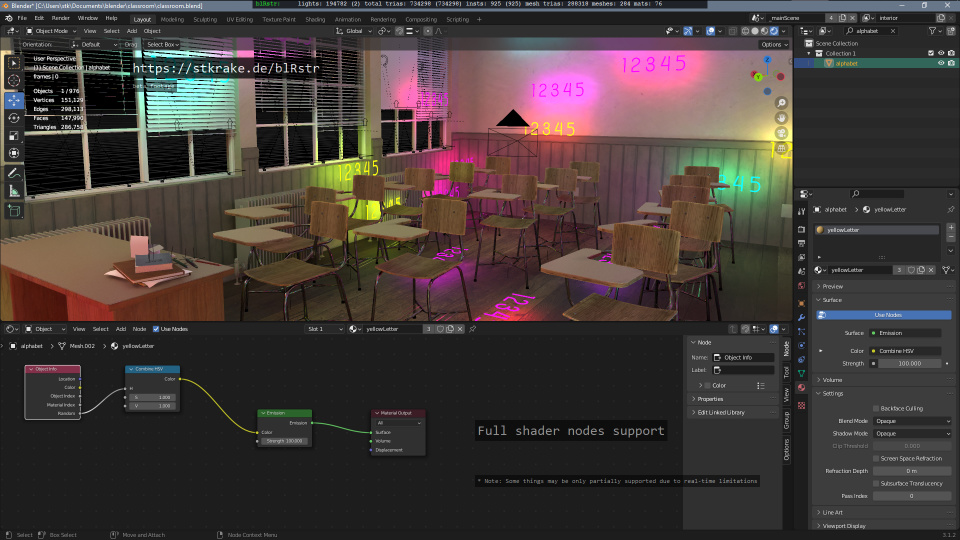

- Supports almost all* surface shader nodes available in Cycles GPU for Material, Light and World.

- Stochastic approximation for Mix/Add Shaders**, with special-case optimizations, plus stochastic alpha (see FAQ).

- Part commercial (a separate executable), part open source (the add-on, which communicates with it via shared memory). See FAQ.

- Works with Blender 3.1+ and with the LTS releases from 2.93 onward. No other add-ons required.

- Requires Windows 10+ with DXR 1.1 (Linux/Vulkan planned).

- DXR-capable GPU required. RTX 2070 or higher recommended.

The sources will be published on GitHub when ready.

* Displacement, Volume, Hair and Subsurface are currently not supported. OSL is not supported. Some nodes are only partially supported; see the detailed list below. Emission shaders have some real-time limitations; see the FAQ entry below. Motion blur is not supported.

** Mix/Add Shaders and translucency are approximated. See FAQ entry below.

FAQ

- What's the use case?

The primary intent is a very fast viewport renderer that can show a good approximation of the final direct and indirect lighting in real time. It is designed to be used alongside other viewport renderers.

- How good is the compatibility with Eevee and Cycles?

Very good. Most nodes reuse the original GLSL code from Eevee, sometimes slightly adapted for HLSL. If no GLSL implementation is available, adapted OSL code from Cycles is used.

- What are the limitations of emission shaders?

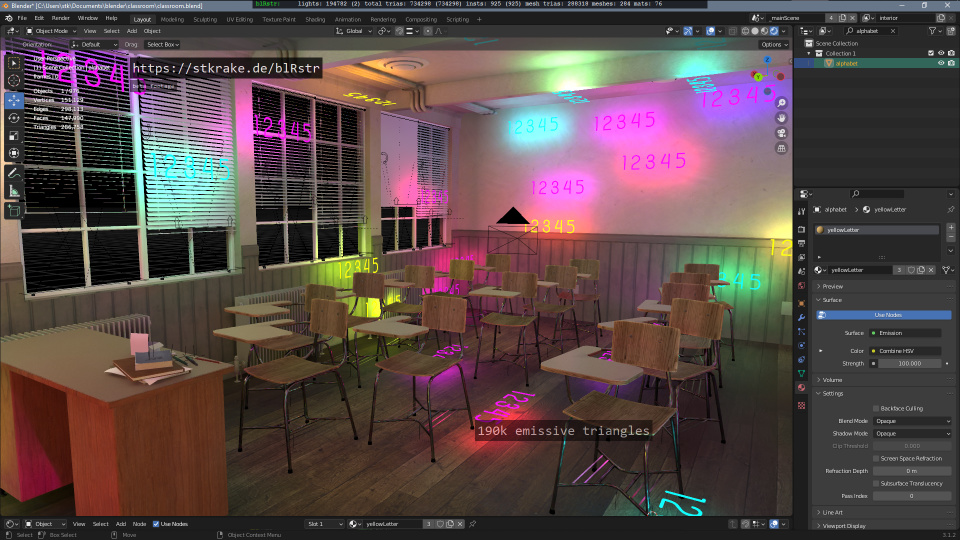

TL;DR: Variations in emission across the surface of a light are ignored. There may be feasible workarounds. The World node is not affected by this.

ReSTIR must sample the lights very often ("candidate samples") to find the most important one. Evaluating the emission shader (or the emission input of other nodes) every time would be prohibitively slow, so one radiance value is presampled per light. Emissive triangles are approximately integrated with the anisotropic texture sampling method used in the RTXDI example, which works quite well if the emissive input comes from an Image Texture (for which blRstr calculates mipmaps). Primitive lights are sampled at UV (0, 0).

But this also means that variations in emission across the surface of a triangle or light are ignored. For geometry, such variations can be approximated with subdivided meshes; for primitive lights this is not possible at all.

This is unfortunately a real-time limitation, but there may be feasible workarounds:

- If the emissive input comes directly from an Image Texture, sample the texture directly.

- Presample the shader into a texture. Users might need to specify a texture resolution for this, and it obviously has limitations regarding shader permutations.

- Give users a switch to enable "prohibitively slow sampling". With few lights it may still be usable.
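As a rough illustration of the per-light presampling described above, here is a minimal Python sketch, assuming a square, power-of-two grayscale emission texture. It deliberately swaps in a simpler isotropic mip selection (mip level chosen from the triangle's UV area, sampled at the UV centroid) instead of the anisotropic method from the RTXDI example; all function names are hypothetical and not blRstr's code.

```python
import math

def build_mips(texture):
    """Box-filtered mip chain for a square, power-of-two grayscale texture
    (list of rows). Level 0 is the full texture, the last level is 1x1."""
    mips = [texture]
    while len(mips[-1]) > 1:
        prev = mips[-1]
        half = len(prev) // 2
        mips.append([
            [(prev[2 * y][2 * x] + prev[2 * y][2 * x + 1]
              + prev[2 * y + 1][2 * x] + prev[2 * y + 1][2 * x + 1]) / 4.0
             for x in range(half)]
            for y in range(half)
        ])
    return mips

def fetch(mip, u, v):
    """Nearest-texel lookup: u selects the column, v selects the row."""
    n = len(mip)
    x = min(n - 1, max(0, int(u * n)))
    y = min(n - 1, max(0, int(v * n)))
    return mip[y][x]

def presample_triangle(mips, uv0, uv1, uv2):
    """Hypothetical: one radiance value per emissive triangle, taken from the
    mip level whose texels roughly cover the triangle's UV footprint."""
    size = len(mips[0])
    area_uv = 0.5 * abs((uv1[0] - uv0[0]) * (uv2[1] - uv0[1])
                        - (uv2[0] - uv0[0]) * (uv1[1] - uv0[1]))
    area_texels = area_uv * size * size
    level = 0
    if area_texels > 1.0:
        # log2(sqrt(area)) = footprint edge length expressed in mip levels
        level = min(len(mips) - 1, math.ceil(0.5 * math.log2(area_texels)))
    cu = (uv0[0] + uv1[0] + uv2[0]) / 3.0
    cv = (uv0[1] + uv1[1] + uv2[1]) / 3.0
    return fetch(mips[level], cu, cv)

def presample_primitive_light(mips):
    """Primitive lights are sampled at UV (0, 0), as described above."""
    return fetch(mips[0], 0.0, 0.0)
```

Whatever the footprint, each light ends up with exactly one value, which is why variation within a single triangle or primitive light is lost.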

- What are "Stochastic Mix Shader" and "Stochastic Alpha" approximations?

TL;DR: Mix/Add Shaders and alpha are approximated with randomly chosen pixels. Some special cases have better solutions.

Mix/Add Shaders require separate render passes. Since ReSTIR and the denoiser rely heavily on screen-space information, separate screen-space buffers would be needed for every mix in the node network. While this may be implemented in the future, two performant workarounds are currently available:

- Special-case optimizations: Many Mix Shaders produce values for unrelated shader inputs (e.g. diffuse plus emission). These values can be fed into one shader and rendered in one pass. blRstr currently recognizes these cases: [Any]+Emission and Diffuse+Glossy.

- Stochastic mixing: The two passes are randomly distributed between pixels, with probabilities driven by the Fac value.
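The stochastic mixing idea can be checked with a small Python sketch. It assumes each input's fully shaded result is already available as an RGB tuple (in a renderer, the random choice instead selects which BSDF is evaluated for that pixel), and it uses Python's `random` module where a GPU implementation would typically hash pixel coordinates and frame index. The function names are hypothetical.

```python
import random

def mix_shader_exact(color_a, color_b, fac):
    """Reference Mix Shader result: (1 - fac) * A + fac * B, per channel."""
    return tuple((1.0 - fac) * a + fac * b for a, b in zip(color_a, color_b))

def stochastic_mix(color_a, color_b, fac, rng):
    """Render only one input for this pixel: B with probability fac, else A.
    The expected value equals the exact mix, with no second screen-space pass."""
    return color_b if rng.random() < fac else color_a

def average_over_pixels(color_a, color_b, fac, n_pixels, seed=0):
    """Average many independent per-pixel choices (stand-in for the denoiser
    and temporal accumulation smoothing out the noise)."""
    rng = random.Random(seed)
    total = [0.0, 0.0, 0.0]
    for _ in range(n_pixels):
        c = stochastic_mix(color_a, color_b, fac, rng)
        for i in range(3):
            total[i] += c[i]
    return tuple(t / n_pixels for t in total)
```

Each pixel pays for a single shader evaluation; the cost of the approximation is noise, which grows as Fac moves toward 0.5.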

Alpha is approximated in the same way with stochastic alpha: on a hit, it is randomly decided whether the hit is rendered or the ray travels further, with probabilities driven by the alpha value.
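A minimal Python sketch of stochastic alpha, assuming the ray's hits are already sorted front to back as `(color, alpha)` pairs with scalar colors. On average, the randomly chosen hit reproduces classic front-to-back "over" compositing, which the sketch includes as a reference. The names are hypothetical; in a real renderer this decision would typically happen in an any-hit shader.

```python
import random

def composite_exact(hits, background):
    """Reference: front-to-back 'over' compositing of (color, alpha) hits."""
    result = 0.0
    transmittance = 1.0
    for color, alpha in hits:
        result += transmittance * alpha * color
        transmittance *= 1.0 - alpha
    return result + transmittance * background

def trace_stochastic_alpha(hits, background, rng):
    """Walk the hits front to back. Each hit is rendered with probability
    alpha; otherwise the ray continues. No hit accepted -> background."""
    for color, alpha in hits:
        if rng.random() < alpha:
            return color
    return background
```

Only one surface is shaded per pixel, so the same screen-space buffers as for opaque geometry suffice.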

A special exception is Blender's Transparent BSDF node. It results in a simple color multiplication and does not need screen space.

- What exactly do "part commercial" and "part open source" mean?

The core renderer is commercial and comes as a separate executable. The Blender add-on is open source (mostly Python). It starts the core executable in its own process and communicates with it via shared memory.
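For readers unfamiliar with the pattern, here is a hedged Python sketch of two processes exchanging a rendered frame through shared memory, using the standard library's `multiprocessing.shared_memory` (available in the Python versions bundled with Blender 2.93+). The header layout, the direction of data flow and all function names are hypothetical; blRstr's actual protocol is not documented here.

```python
import struct
from multiprocessing import shared_memory

# Hypothetical layout: a small header followed by RGBA float32 pixels.
HEADER = struct.Struct("<III")  # frame_id, width, height
CHANNELS = 4

def create_frame_buffer(name, width, height):
    """Create a shared block large enough for one RGBA float32 frame.
    name=None lets Python pick a unique name."""
    size = HEADER.size + width * height * CHANNELS * 4
    return shared_memory.SharedMemory(name=name, create=True, size=size)

def write_frame(shm, frame_id, width, height, pixels):
    """Producer side (the render process in this sketch)."""
    if len(pixels) != width * height * CHANNELS:
        raise ValueError("pixel count does not match width * height * 4")
    struct.pack_into(f"<{len(pixels)}f", shm.buf, HEADER.size, *pixels)
    # Writing the header last only hints at ordering; a real implementation
    # needs proper cross-process synchronization (e.g. a lock or event).
    HEADER.pack_into(shm.buf, 0, frame_id, width, height)

def read_frame(shm):
    """Consumer side (the add-on in this sketch)."""
    frame_id, width, height = HEADER.unpack_from(shm.buf, 0)
    count = width * height * CHANNELS
    pixels = struct.unpack_from(f"<{count}f", shm.buf, HEADER.size)
    return frame_id, width, height, pixels
```

The reader attaches by name with `shared_memory.SharedMemory(name=...)`, so the only thing the two processes need to agree on is the block name and the layout.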

- Could it be used for production rendering?

Maybe. If the quality is good enough for a given production, it could certainly be used as the final renderer. People are using game engines for production rendering now and seem to be happy with their very limited lighting capabilities. Expect game engine quality with much, much better lighting.

- Why is it so much faster than anything before?

Call it scientific and/or technical progress. Without real-time ray tracing, there was no real need for very fast light sampling. Then the inventors of ReSTIR[1] looked at Monte Carlo methods not previously used in path tracing and found a way to vastly improve light sampling (at the expense of some precision). And the RTXDI[2] and NRD[3] developers made it production-ready.
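To make the idea concrete, here is a minimal Python sketch of the building block ReSTIR[1] rests on: resampled importance sampling (RIS) with a streaming weighted reservoir. It assumes a per-light target function p̂ (for example a presampled, unshadowed contribution) and uniformly drawn candidates. The spatial and temporal reservoir reuse that gives ReSTIR its name is not shown, and nothing here mirrors RTXDI's actual API.

```python
import random
from dataclasses import dataclass

@dataclass
class Reservoir:
    sample: int = -1    # index of the currently selected light
    w_sum: float = 0.0  # running sum of resampling weights
    m: int = 0          # number of candidates seen so far

    def update(self, candidate, weight, rng):
        """Weighted reservoir sampling: keep the candidate with
        probability weight / w_sum, without storing all candidates."""
        self.w_sum += weight
        self.m += 1
        if self.w_sum > 0 and rng.random() < weight / self.w_sum:
            self.sample = candidate

def sample_light_ris(target_pdf, num_candidates, rng):
    """Draw candidates uniformly (source pdf 1/N), weight each by
    target_pdf / source_pdf and keep one via the reservoir.
    Returns (light index, unbiased contribution weight W)."""
    n = len(target_pdf)
    r = Reservoir()
    for _ in range(num_candidates):
        x = rng.randrange(n)
        r.update(x, target_pdf[x] * n, rng)
    if r.sample < 0:
        return -1, 0.0
    # W = w_sum / (M * p_hat(y)); p_hat(y) > 0 because y had positive weight
    return r.sample, r.w_sum / (r.m * target_pdf[r.sample])
```

Only the selected light needs a shadow ray: `f(y) * W` is an unbiased estimate of the summed contribution of all lights, as long as p̂ is positive wherever the true contribution is.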

- Is it Nvidia only?

No. It should run on any hardware supporting DXR 1.1 (or the Vulkan Ray Tracing extensions, see below). In practice, few devices from other vendors are currently available or in use, so this has not really been tested.

- Does it run on Linux?

This is planned. A Vulkan-based version is technically possible and doable.

References

1. Benedikt Bitterli, Chris Wyman, Matt Pharr, Peter Shirley, Aaron Lefohn, Wojciech Jarosz. Spatiotemporal reservoir resampling for real-time ray tracing with dynamic direct lighting. ACM Transactions on Graphics (Proceedings of SIGGRAPH), 39(4), July 2020. DOI: https://dl.acm.org/doi/10.1145/3386569.3392481.

2. RTX Direct Illumination (RTXDI).

3. NVIDIA Real-Time Denoisers.

Partially supported nodes

- Attribute Node (always uses the active vertex color; "Type" is always "Geometry")

- Color Attribute Node (always uses the active vertex color layer)

- Geometry Node (no "Pointiness", no "Random per Island")

- Glossy BSDF (Distribution "GGX" only)

- Light Path Node (only Camera/Shadow Ray, no Depths, no Length)

- Object Info Node (only outputs "Location" and "Random")

- Particle Info Node (only outputs "Location" and "Random")

- Principled BSDF (unsupported: Subsurface, Specular, Anisotropic, Sheen, Clearcoat, IOR, Transmission. Distribution "GGX" only)

- Refraction BSDF (rendered as transparent, with stochastic alpha)

- Texture Coordinate Node (only output "UV")

- Translucent BSDF (rendered with stochastic alpha)

- Transparent BSDF (rendered with stochastic alpha)

- UV Map Node (only active UV map)

Unsupported nodes

- AOV Output Node

- Ambient Occlusion

- Bevel Node

- Curves Info Node

- Displacement Node

- Hair BSDF

- Point Density Node

- Point Info

- Principled Hair BSDF

- Principled Volume

- Script Node

- Shader To RGB

- Subsurface Scattering

- Toon BSDF

- Velvet BSDF

- Volume Absorption

- Volume Info Node

- Volume Scatter